Introduction

Audio classification, the process of assigning sound clips to predefined categories, is a core capability in a growing number of products and industries. From smart security systems that detect alarm sounds in real time to automotive safety interfaces and advanced healthcare diagnostics, robust audio classification can give these systems a significant competitive advantage. By converting raw audio into visual representations (such as spectrograms and mel spectrograms) and applying deep learning, you can automatically extract intricate, hierarchical features from audio signals. This blog covers the foundational theory, CNN architectures, state-of-the-art audio processing methods, and data augmentation techniques that power today’s audio classification systems.

Foundations of Deep Learning in Audio

Deep learning employs multilayer neural networks to learn abstract representations directly from data. In audio processing, raw waveforms are often converted into visual representations such as spectrograms or mel spectrograms. A typical deep learning model for audio comprises:

- Input Layer: Receives raw or preprocessed data (e.g., spectrogram images).

- Hidden Layers: Perform weighted linear combinations and apply nonlinear activations (such as ReLU) to extract progressively abstract features.

- Output Layer: Uses softmax activation for multiclass classification, providing a probability distribution over target categories.
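To make the three layer types concrete, here is a minimal NumPy sketch of a forward pass through one hidden layer and a softmax output. The layer sizes, random weights, and function names are hypothetical and chosen only for illustration; a real model would learn its weights during training.

```python
import numpy as np


def relu(x):
    # Elementwise max(0, x): the nonlinearity used in the hidden layer
    return np.maximum(0.0, x)


def softmax(logits):
    # Subtract the row-wise max for numerical stability (does not change the result)
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def mlp_forward(x, w1, b1, w2, b2):
    # Hidden layer: weighted linear combination followed by ReLU
    hidden = relu(x @ w1 + b1)
    # Output layer: linear scores (logits) converted to class probabilities
    return softmax(hidden @ w2 + b2)


rng = np.random.default_rng(0)
n_features, n_hidden, n_classes = 64, 32, 5      # hypothetical sizes
x = rng.standard_normal((8, n_features))         # batch of 8 flattened feature vectors
w1 = rng.standard_normal((n_features, n_hidden)) * 0.1
b1 = np.zeros(n_hidden)
w2 = rng.standard_normal((n_hidden, n_classes)) * 0.1
b2 = np.zeros(n_classes)

probs = mlp_forward(x, w1, b1, w2, b2)
print(probs.shape)          # (8, 5)
print(probs.sum(axis=1))    # each row sums to 1 (up to floating-point rounding)
```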

Audio Processing Techniques

Before analysis, raw audio signals must be transformed into formats suitable for deep learning:

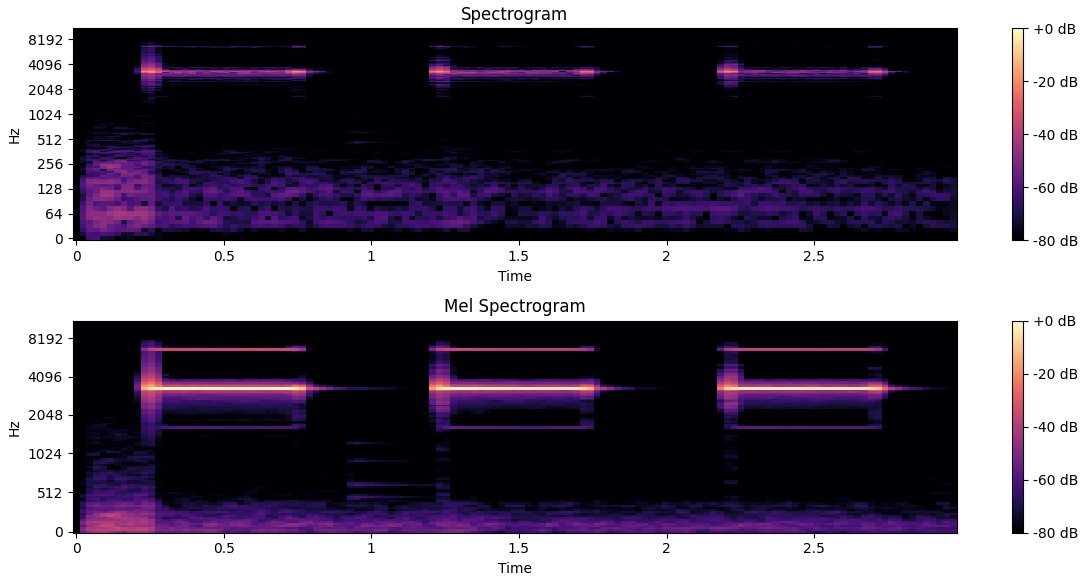

- Spectrograms: Generated using the Short Time Fourier Transform (STFT), spectrograms visually map frequency intensities over time.

- Mel-Spectrograms: By mapping the frequency axis to the Mel scale (which aligns with human auditory perception), these representations emphasize perceptually significant features.

Spectrogram and Mel spectrogram of an alarm sound
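The spectrogram computation can be sketched in a few lines of NumPy: slice the waveform into overlapping frames, apply a window, and take the squared magnitude of each frame's FFT. This is a minimal sketch under assumed settings (16 kHz sample rate, `n_fft=1024`, `hop_length=256`, a Hann window, no padding), and the synthetic 1 kHz tone only stands in for a real alarm recording. For real projects, librosa's `librosa.stft` and `librosa.feature.melspectrogram` handle padding and edge cases; note that `librosa.stft` centers frames by default, so its frame count differs from this sketch.

```python
import numpy as np


def stft_power_spectrogram(signal, n_fft=1024, hop_length=256):
    """Power spectrogram |STFT|^2 with shape (n_fft // 2 + 1, n_frames)."""
    window = np.hanning(n_fft)  # Hann window tapers frame edges to reduce spectral leakage
    n_frames = 1 + (len(signal) - n_fft) // hop_length
    # Overlapping frames; no padding, so samples past the last full frame are dropped
    frames = np.stack([
        signal[i * hop_length : i * hop_length + n_fft] * window
        for i in range(n_frames)
    ])
    # One-sided FFT of each frame: rows are frames, columns are frequency bins
    spectrum = np.fft.rfft(frames, n=n_fft, axis=1)
    return (np.abs(spectrum) ** 2).T  # transpose to (frequency, time)


sr = 16000                               # assumed sample rate in Hz
t = np.arange(sr) / sr                   # one second of audio
tone = np.sin(2 * np.pi * 1000.0 * t)    # synthetic 1 kHz tone
spec = stft_power_spectrogram(tone)
print(spec.shape)                        # (513, 59)

peak_bin = spec.mean(axis=1).argmax()    # frequency bin with the most average energy
print(peak_bin * sr / 1024)              # 1000.0
```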

Convolutional Neural Networks (CNNs)

Overview of CNNs

Convolutional Neural Networks (CNNs) are a specialized deep learning architecture designed for grid-like data such as images or spectrograms. Originally developed for image classification, CNNs have proven highly effective at automatically learning both low-level and high-level features from data.

Advantages of CNNs

- Automatic Feature Extraction: CNNs learn to detect local time-frequency patterns, eliminating the need for manual feature engineering.

- Parameter Efficiency: Through weight sharing, CNNs reuse the same small filter at every position of the input, so they require far fewer parameters than fully connected networks.

- Hierarchical Learning: Early layers capture simple features (such as edges or local frequency patterns), while deeper layers learn increasingly complex abstractions.
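The parameter savings from weight sharing are easy to quantify. The sketch below uses hypothetical helper functions and an assumed 64×64 single-channel input to compare a 3×3 convolution with a fully connected layer that produces an output of the same size.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, out_channels):
    # One kernel per (input channel, output channel) pair, plus one bias per output channel
    return kernel_h * kernel_w * in_channels * out_channels + out_channels


def dense_params(n_inputs, n_outputs):
    # One weight per input-output pair, plus one bias per output
    return n_inputs * n_outputs + n_outputs


height, width = 64, 64                  # hypothetical single-channel spectrogram patch
conv = conv2d_params(3, 3, 1, 1)        # 3x3 filter; "same" padding keeps the output at 64x64
dense = dense_params(height * width, height * width)  # dense layer with the same 64x64 output
print(conv)    # 10
print(dense)   # 16781312
```

The convolution's count stays at 10 no matter how large the input is, while the dense layer's count grows with the square of the number of input positions.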

CNN Architecture Explained

A typical CNN for audio classification includes:

- Convolutional Layers: Learnable filters (e.g., 3×3 or 5×5) slide over the input (e.g., a spectrogram) to produce feature maps by computing dot products with local receptive fields.

- Activation Functions: Nonlinearities (commonly ReLU) are applied to the convolution output.

- Batch Normalization: Normalizes activations to stabilize and accelerate training.

- Pooling Layers: Reduce spatial dimensions (for example, with max pooling) while retaining the most prominent features.

- Regularization Techniques: Methods such as dropout are applied to prevent overfitting by randomly deactivating a fraction of neurons during training. In addition, L2 regularization (also known as weight decay) penalizes large weights, encouraging the network to learn simpler, more generalizable patterns.

- Fully Connected Layers: After flattening the feature maps, these layers integrate the features and output class probabilities using softmax.
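The building blocks above can be sketched in plain NumPy to show what each one computes. This is an illustrative, unoptimized forward pass under assumed shapes (a batch of four 32×32 inputs and eight 3×3 filters); the function names are hypothetical, and frameworks such as PyTorch or TensorFlow provide optimized, trainable versions. Like those frameworks, `conv2d_valid` computes a cross-correlation, which is what deep learning libraries call convolution.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv2d_valid(image, kernels, bias):
    """'Valid' cross-correlation. image: (H, W), kernels: (C_out, kH, kW), bias: (C_out,)."""
    c_out, kh, kw = kernels.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((c_out, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]  # local receptive field
            # Dot product of this patch with every filter at once
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2], [0, 1])) + bias
    return out


def relu(x):
    return np.maximum(0.0, x)


def batch_norm(x, gamma, beta, eps=1e-5):
    """Training-mode batch norm for x of shape (N, C, H, W), using per-channel batch statistics."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)


def max_pool2d(x, size=2):
    """Non-overlapping max pooling over the last two axes (leftover rows/columns are dropped)."""
    h, w = x.shape[-2] // size * size, x.shape[-1] // size * size
    x = x[..., :h, :w]
    x = x.reshape(*x.shape[:-2], h // size, size, w // size, size)
    return x.max(axis=(-3, -1))


def dropout(x, rate, training=True):
    """Inverted dropout: zero a fraction `rate` of units and rescale the rest during training."""
    if not training or rate == 0.0:
        return x
    mask = rng.random(x.shape) >= rate
    return x * mask / (1.0 - rate)


def l2_penalty(weights, weight_decay=1e-4):
    # Weight decay term added to the training loss; grows with the squared size of the weights
    return weight_decay * sum(np.sum(w ** 2) for w in weights)


batch = rng.standard_normal((4, 32, 32))        # 4 hypothetical 32x32 spectrogram patches
kernels = rng.standard_normal((8, 3, 3)) * 0.1  # 8 learnable 3x3 filters
bias = np.zeros(8)

maps = np.stack([conv2d_valid(x, kernels, bias) for x in batch])  # (4, 8, 30, 30)
maps = batch_norm(relu(maps), gamma=np.ones(8), beta=np.zeros(8))
maps = max_pool2d(maps)                          # (4, 8, 15, 15)
maps = dropout(maps, rate=0.25)                  # applied during training only
flat = maps.reshape(len(maps), -1)               # (4, 1800): input to the fully connected layers
loss_regularizer = l2_penalty([kernels])
```

The layers here follow the order listed above (convolution, ReLU, batch normalization, pooling); many architectures instead place batch normalization before the activation, and both orderings are used in practice.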

Applying CNNs to Audio Classification

From Audio to CNN: Feature Extraction

To apply CNNs to audio classification, raw audio signals are first converted into two-dimensional representations:

- Transformation: To convert raw audio into a format suitable for CNN processing, we typically use the Short-Time Fourier Transform (STFT) to generate a spectrogram. The spectrogram can then be passed through a mel filter bank to create a mel spectrogram. In most cases, the mel spectrogram is preferred because it more closely approximates human auditory perception, emphasizing the frequency bands most relevant to how we hear.

- Input Preparation: These visual representations serve as the input to the CNN, allowing the network to automatically extract meaningful features from the audio data.
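A mel filter bank is a set of triangular filters whose centers are evenly spaced on the mel scale. The sketch below assumes the HTK-style conversion `m = 2595 * log10(1 + f / 700)` and 40 mel bands; librosa's `librosa.filters.mel` defaults to the Slaney variant of the scale, so its filters differ slightly. The random power spectrogram is only a stand-in for the output of an STFT, and the helper names are hypothetical.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style mel scale
    return 2595.0 * np.log10(1.0 + np.asarray(f) / 700.0)


def mel_to_hz(m):
    # Inverse of hz_to_mel
    return 700.0 * (10.0 ** (np.asarray(m) / 2595.0) - 1.0)


def mel_filter_bank(sr, n_fft, n_mels=40, fmin=0.0, fmax=None):
    """Triangular filters with shape (n_mels, n_fft // 2 + 1), evenly spaced on the mel scale."""
    fmax = sr / 2 if fmax is None else fmax
    fft_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)  # frequency of each STFT bin in Hz
    # n_mels + 2 edge points: filter k spans (left, center, right) = points k, k+1, k+2
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    bank = np.zeros((n_mels, len(fft_freqs)))
    for k in range(n_mels):
        left, center, right = hz_points[k], hz_points[k + 1], hz_points[k + 2]
        rising = (fft_freqs - left) / (center - left)     # 0 at left edge, 1 at center
        falling = (right - fft_freqs) / (right - center)  # 1 at center, 0 at right edge
        bank[k] = np.maximum(0.0, np.minimum(rising, falling))
    return bank


sr, n_fft = 16000, 1024
bank = mel_filter_bank(sr, n_fft, n_mels=40)
power_spec = np.random.default_rng(0).random((n_fft // 2 + 1, 59))  # stand-in STFT power spectrogram
mel_spec = bank @ power_spec                  # (40, 59): mel bands x frames
log_mel = 10.0 * np.log10(mel_spec + 1e-10)   # decibel scaling
print(mel_spec.shape)                         # (40, 59)
```

The final log step converts power to decibels, a common (though not mandatory) compression applied before a mel spectrogram is fed to a CNN.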

CNN Pipeline for Audio Classification

A typical CNN-based audio classification pipeline involves:

- Preprocessing: Convert raw audio into spectrogram or mel spectrogram images.

- Feature Extraction: Pass the spectrogram through several convolutional layers that distill the most relevant features.

- Flattening: Transform the final feature maps into a one-dimensional vector.

- Classification: Use fully connected layers and a softmax output to generate probability distributions for each audio class.
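Keeping track of tensor shapes is the main bookkeeping in this pipeline, especially at the flattening step. The sketch below assumes a hypothetical 64-band, 128-frame mel spectrogram and two blocks of 3×3 convolution with "same" padding followed by 2×2 max pooling, using 16 and then 32 filters; none of these values come from a specific published model.

```python
def conv2d_out(size, kernel, padding, stride=1):
    # Standard output-size formula for one spatial axis of a convolution
    return (size + 2 * padding - kernel) // stride + 1


def pipeline_shapes(n_mels=64, n_frames=128, channels=(16, 32), kernel=3, pool=2):
    """Track (channels, mel bins, frames) through conv + pool blocks, then flatten."""
    c, h, w = 1, n_mels, n_frames          # single-channel mel spectrogram "image"
    shapes = [("input", (c, h, w))]
    for i, c in enumerate(channels, start=1):
        pad = kernel // 2                  # "same" padding for odd kernel sizes
        h, w = conv2d_out(h, kernel, pad), conv2d_out(w, kernel, pad)
        shapes.append((f"conv{i}", (c, h, w)))
        h, w = h // pool, w // pool        # non-overlapping max pooling
        shapes.append((f"pool{i}", (c, h, w)))
    shapes.append(("flatten", (c * h * w,)))
    return shapes


for name, shape in pipeline_shapes():
    print(name, shape)
```

Under these assumptions, the final feature maps have shape (32, 16, 32), so the flattened vector has 16,384 values, which becomes the input size of the first fully connected layer.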

Data Augmentation and Training Strategies

For robust audio classification in real-world conditions, consider the following approaches:

- Data Augmentation:

  - Time Stretching: Change the speed of the audio while preserving its pitch.

  - Pitch Shifting: Raise or lower the pitch to simulate variations in a speaker's voice or an instrument's tuning.

  - Adding Noise: Mix in background noise to mimic real-world recording environments.

- CNN Architecture Evaluation:

  - Experiment with various CNN architectures to find the best balance between efficiency and accuracy.

- Training the CNN:

  - Loss Function: Use cross-entropy for multiclass classification.

  - Optimization: Apply optimizers such as Adam or Stochastic Gradient Descent (SGD).

  - Evaluation Metrics: Track metrics such as accuracy, precision, recall, and F1 score to guide improvements.
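Several of these strategies reduce to short formulas. The NumPy sketch below shows noise augmentation at a chosen signal-to-noise ratio, the cross-entropy loss, and per-class precision, recall, and F1; the helper names, the 10 dB SNR, and the toy labels are illustrative assumptions. For time stretching and pitch shifting, librosa provides `librosa.effects.time_stretch` and `librosa.effects.pitch_shift`. In PyTorch, `torch.nn.CrossEntropyLoss` expects raw logits rather than softmax probabilities, and `torch.optim.Adam` and `torch.optim.SGD` implement the optimizers mentioned above.

```python
import numpy as np

rng = np.random.default_rng(0)


def add_noise(signal, snr_db):
    """Mix in white Gaussian noise so the result has the requested signal-to-noise ratio (dB)."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.standard_normal(signal.shape) * np.sqrt(noise_power)
    return signal + noise


def cross_entropy(probs, labels, eps=1e-12):
    """Mean negative log-probability of the true class. probs: (N, K), labels: (N,) integers."""
    return float(-np.mean(np.log(probs[np.arange(len(labels)), labels] + eps)))


def precision_recall_f1(y_true, y_pred, positive):
    """One-vs-rest precision, recall, and F1 for a single class label."""
    tp = np.sum((y_pred == positive) & (y_true == positive))
    fp = np.sum((y_pred == positive) & (y_true != positive))
    fn = np.sum((y_pred != positive) & (y_true == positive))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


clean = np.sin(2 * np.pi * 440.0 * np.arange(16000) / 16000)  # 1 s synthetic tone at 16 kHz
noisy = add_noise(clean, snr_db=10.0)

probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.3, 0.3, 0.4]])
labels = np.array([0, 1, 0])
loss = cross_entropy(probs, labels)

y_true = np.array([0, 1, 0, 2, 1, 0])
y_pred = np.array([0, 1, 2, 2, 0, 0])
metrics = precision_recall_f1(y_true, y_pred, positive=0)
print(loss, metrics)
```

For these toy labels, class 0 has two true positives, one false positive, and one false negative, so its precision, recall, and F1 all equal 2/3.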

Final Thoughts

By integrating deep learning with audio processing techniques, CNNs can automatically learn complex patterns from spectrogram images. This approach has led to state-of-the-art performance in audio classification, and as research progresses, these systems should continue to advance the field of audio analysis.